I’m going to change what I write. I’m using new tools that allow me to pull together all the various articles and blog posts that I’ve written in the past into comprehensive papers or articles.

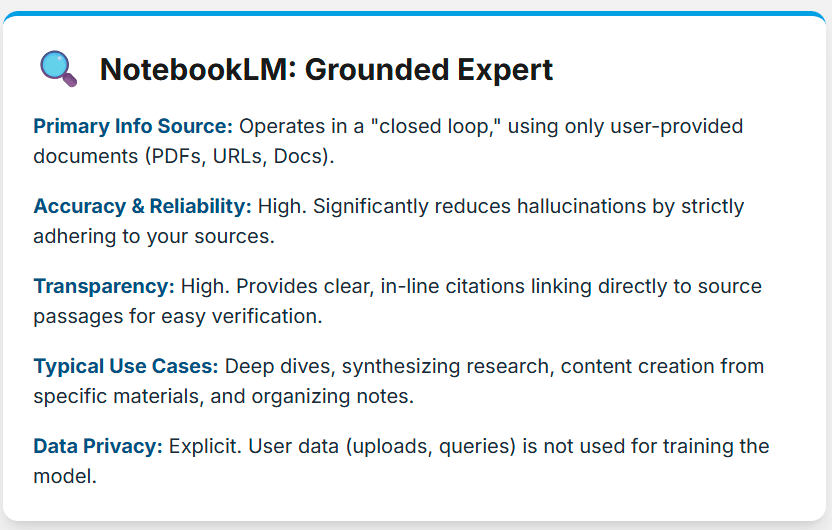

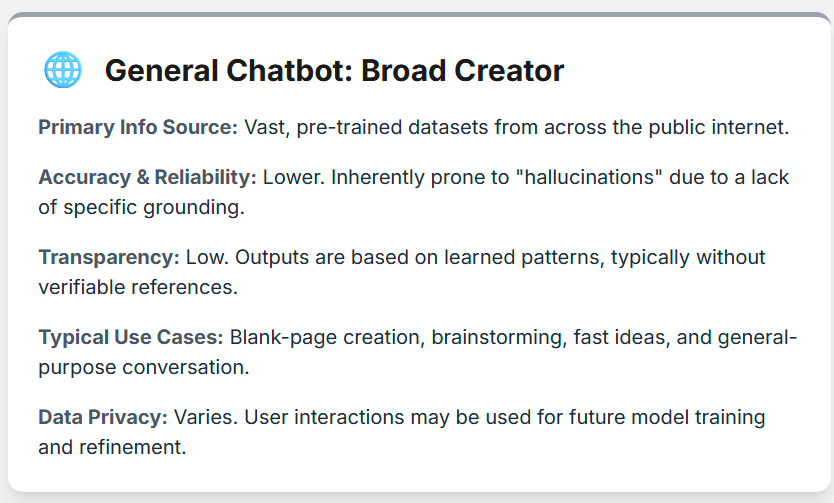

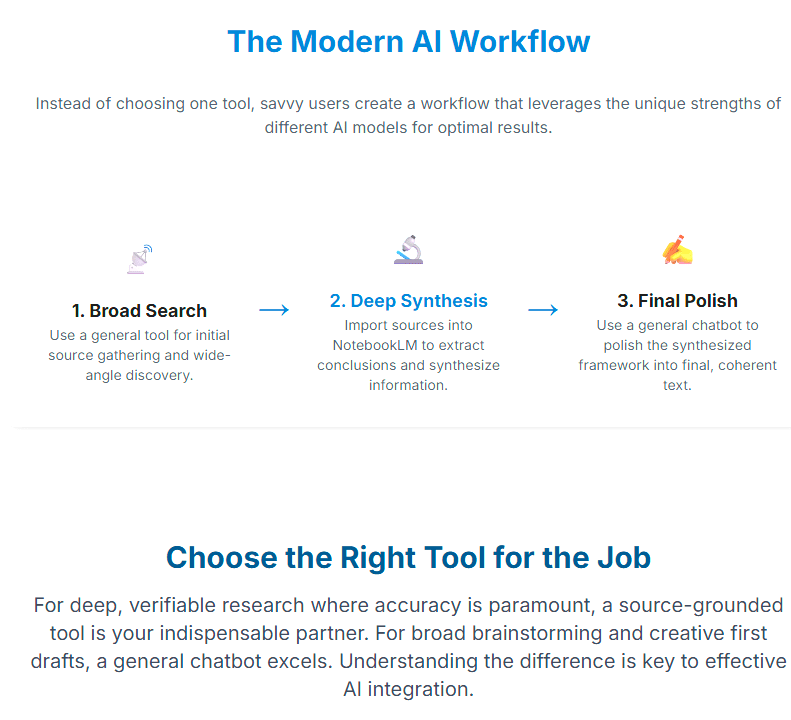

I am going to begin sharing a lot of the analysis I obtain from doing research with two relatively new Google artificial intelligence (AI) applications, Gemini and NotebookLM. I realize many people have negative experiences or ideas about AI. What follows is an explanation of two philosophies of artificial intelligence. I’m using NotebookLM because it is source-grounded or “closed loop”, the difference explained in the following.

Gemini 2.5 Flash

Gemini 2.5 Flash is a highly efficient and fast AI model developed by Google, part of the Gemini 2.5 family.1 It’s designed to be a “workhorse” model, excelling at high-volume, latency-sensitive tasks where speed and cost-efficiency are paramount.2

Here’s a breakdown of its key characteristics:

- Speed and Efficiency: As a “Flash” model, its primary focus is on delivering rapid responses with low latency.3 This makes it ideal for real-time applications, such as chatbots, summarization, classification, and quick Q&A.4 It’s optimized to use fewer computational resources compared to more complex models.5

- “Thinking Capabilities”: A notable feature of Gemini 2.5 Flash (and the 2.5 family in general) is its “thinking capabilities.” This allows the model to go through a reasoning process before generating its response. While optimized for speed, it can still engage in a degree of internal deliberation to produce more accurate and nuanced outputs. You can even sometimes view this thinking process.

- Controllable Thinking Budget: Developers have the ability to control the “thinking budget” for Gemini 2.5 Flash.6 This means you can adjust how much the model “thinks” before responding, allowing for a balance between speed, cost, and accuracy depending on the specific use case.7 For instance, for very quick and simple tasks, you might reduce the thinking budget to maximize speed.8

- Multimodality: Gemini 2.5 Flash is inherently multimodal, meaning it can understand and process information across various formats, including text, images, audio, and video.9

- Long Context Window: It supports a large context window, typically around 1 million tokens, which allows it to process and understand vast amounts of information within a single prompt.10 This is beneficial for tasks like summarizing long documents or analyzing large datasets.11

- Tool Integration: Gemini 2.5 Flash can integrate with various tools, including Google Search and Code Execution.12 This allows it to access real-time information from the web or execute code to solve problems, making its responses more factual and capable.13

- Use Cases: It’s well-suited for a wide range of applications, including:

- Summarization: Quickly summarizing long articles, documents, or conversations.14

- Chat Applications: Powering responsive and efficient chatbots.15

- Data Extraction: Extracting specific information from unstructured text or documents.16

- Captioning: Generating descriptions for images or videos.17

- Translation: Providing quick and accurate language translations.18

- Real-time interactions: Any application requiring instant AI responses.19

Gemini 2.5 Flash vs. Gemini 2.5 Pro:

While both are part of the Gemini 2.5 family and share core architectural elements and multimodality, they are optimized for different purposes:

- Gemini 2.5 Flash: Prioritizes speed, cost-efficiency, and high throughput.20 It’s the “workhorse” for tasks where rapid, high-volume processing is crucial.21 While it has reasoning capabilities, they are often balanced for speed.22

- Gemini 2.5 Pro: Focuses on deeper reasoning, higher accuracy, and handling more complex tasks.23 It’s Google’s most advanced model for scenarios requiring extensive analysis, complex coding, and intricate problem-solving. It might be slower and more resource-intensive than Flash, but it delivers superior quality for demanding applications.

In essence, Gemini 2.5 Flash provides a powerful yet lightweight AI solution, making advanced generative AI capabilities accessible for a broad range of real-time and cost-sensitive applications.24